Network Infrastructure Visibility and Analytics with Data Streaming

Given all the emphasis today on data analytics for driving business growth and increasing business efficiency, it is no surprise that data streaming is everywhere in the news. In this blog we explore the ins and outs of data streaming and the benefits it offers your organization’s day-to-day operations.

So, What Is Data Streaming and Why Does It Matter?

Data streaming is the mechanism by which you collect comprehensive, real-time data from your applications and infrastructure for analysis by various tools and analytics software. While there is a lot of excitement about machine learning algorithms and applications, it is important to remember that they are only as good as the data available for learning.

Why Must the Data Be Real-Time?

Analytics and machine learning tools can only act on conditions they can see, so access to real-time data (via streaming) is a fundamental requirement for these new and evolving technology and application ecosystems.

You need real-time data to see what is happening in your infrastructure across the compute, storage, and network domains. You can then process and use that data in many ways for ongoing monitoring, analysis, and remediation:

- Monitoring helps you check network availability, performance, and latency to ensure you can deliver the network Service Level Agreement (SLA).

- You can also analyze the collected data, either to optimize network efficiency or to plan the network expansion needed to meet growth goals.

- Finally, the data can be analyzed to detect various types of events and failures in your network and to trigger automatic remediation actions. This helps improve network uptime and supports seamless network expansion.
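As a concrete illustration of the monitoring step, the sketch below checks a batch of streamed probe samples against two SLA targets: availability and 99th-percentile latency. The sample format, the `sla_report` function, and the target values are hypothetical assumptions for illustration, not part of any Extreme product.

```python
import math
from typing import Dict, List, Tuple

Sample = Tuple[bool, float]  # (reachable, latency_ms): hypothetical streamed probe result


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def sla_report(samples: List[Sample], latency_sla_ms: float,
               availability_sla_pct: float) -> Dict[str, object]:
    """Compare streamed probe samples against availability and latency targets."""
    if not samples:
        raise ValueError("no samples to evaluate")
    latencies = [lat for reachable, lat in samples if reachable]
    availability = 100.0 * len(latencies) / len(samples)
    # Latency only means something for probes that got an answer
    p99 = percentile(latencies, 99) if latencies else math.inf
    return {
        "availability_pct": availability,
        "p99_latency_ms": p99,
        "availability_ok": availability >= availability_sla_pct,
        "latency_ok": p99 <= latency_sla_ms,
    }


if __name__ == "__main__":
    window = [(True, 5.0)] * 95 + [(True, 50.0)] * 3 + [(False, 0.0)] * 2
    print(sla_report(window, latency_sla_ms=20.0, availability_sla_pct=99.9))
```

With these made-up numbers, 98 of 100 probes answered, so a 99.9% availability target is missed, and the p99 latency of 50 ms breaches a 20 ms target.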

Advantages over Traditional Techniques

Traditional techniques such as SNMP are not well suited to continuous, real-time transfer, because an SNMP manager has to poll each device repeatedly for information. Syslog is another traditional tool. Both remain quite useful, but they offer coarse time granularity, and protocols like SNMP are "pull" based: the tool has to request data at regular time intervals.
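The cost of coarse granularity is easy to see with interface counters. A rate computed from two cumulative counter readings is an average over the interval between them, so a long interval dilutes a short burst. The sketch below uses a made-up traffic pattern (a steady 1,000 octets/s with a one-second burst of 1,000,000 octets/s) to compare 1-second and 60-second sampling; the function names and numbers are illustrative assumptions.

```python
from typing import List, Tuple

CounterSample = Tuple[int, int]  # (timestamp_s, cumulative_octets)


def simulate_counter(duration_s: int, base_rate: int, burst_start_s: int,
                     burst_len_s: int, burst_rate: int) -> List[CounterSample]:
    """Hypothetical interface counter, read once per second starting at t=0."""
    counter, series = 0, [(0, 0)]
    for t in range(1, duration_s + 1):
        # Traffic carried during the one-second slot [t-1, t)
        in_burst = burst_start_s <= t - 1 < burst_start_s + burst_len_s
        counter += burst_rate if in_burst else base_rate
        series.append((t, counter))
    return series


def sample_every(series: List[CounterSample], interval_s: int) -> List[CounterSample]:
    """Keep one reading per interval (series is indexed by whole seconds)."""
    return series[::interval_s]


def rates(samples: List[CounterSample]) -> List[float]:
    """Average rate in octets/s between consecutive counter readings."""
    return [(c2 - c1) / (t2 - t1) for (t1, c1), (t2, c2) in zip(samples, samples[1:])]


if __name__ == "__main__":
    series = simulate_counter(120, base_rate=1_000, burst_start_s=30,
                              burst_len_s=1, burst_rate=1_000_000)
    print("peak at 1 s sampling: ", max(rates(sample_every(series, 1))))
    print("peak at 60 s sampling:", max(rates(sample_every(series, 60))))
```

Sampled every second, the burst shows up at its full 1,000,000 octets/s; sampled every 60 seconds, the same minute averages out to 17,650 octets/s and the burst is effectively invisible.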

Introducing gRPC

A newer method for data streaming is gRPC, an open source Remote Procedure Call (RPC) framework originally developed by Google. gRPC runs over HTTP/2 and typically serializes data with Protocol Buffers, a compact binary encoding, which makes data transfer very efficient. Once a streaming session is established, the device sends data periodically without waiting to be polled (Figure 1).

Figure 1: gRPC Bi-Directional Streaming

Data Streaming in Operation

Ongoing monitoring of a data center network is typically done by the operations team. Streaming supports this work through the continuous, periodic transfer of information from the monitored entity (e.g., a network switch or router).

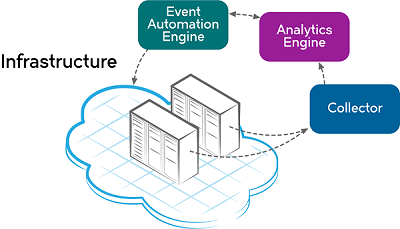

This information is usually transmitted from the monitored entity via a “push” model, wherein the monitored entity periodically sends data to a receiving entity called a collector. Figure 2 illustrates the complete data streaming ecosystem.

Figure 2: Data Streaming Ecosystem
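A minimal sketch of the push model, using only the Python standard library: the "device" side sends each sample as a UDP datagram, and the collector simply listens and never asks for data. JSON is used here for readability, whereas the SLX platforms described below encode data with GPB. The sample fields, the loopback address, and the function names are hypothetical.

```python
import json
import socket
import time
from typing import Dict, List, Tuple

Address = Tuple[str, int]


def push_samples(collector: Address, samples: List[Dict], interval_s: float = 0.0) -> None:
    """Monitored-entity side: push each sample to the collector, one datagram per sample."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for sample in samples:
            sock.sendto(json.dumps(sample).encode("utf-8"), collector)
            time.sleep(interval_s)  # the streaming interval; 0 keeps the demo fast


def receive(sock: socket.socket, count: int, timeout_s: float = 2.0) -> List[Dict]:
    """Collector side: wait for pushed datagrams; no polling requests are sent."""
    sock.settimeout(timeout_s)
    records = []
    for _ in range(count):
        payload, _sender = sock.recvfrom(65535)
        records.append(json.loads(payload.decode("utf-8")))
    return records


def demo(count: int = 3) -> List[Dict]:
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as collector:
        collector.bind(("127.0.0.1", 0))  # ephemeral loopback port
        samples = [{"device": "switch-1", "seq": i, "in_octets": 1_000 * i}
                   for i in range(count)]
        push_samples(collector.getsockname(), samples)
        return receive(collector, count)


if __name__ == "__main__":
    for record in demo():
        print(record)
```

Because the collector binds before the exporter starts sending, the datagrams queue in the socket buffer until they are read. A production collector would run its receive loop continuously and tolerate loss, since UDP does not guarantee delivery.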

The collector can feed the data into an analytics engine, such as Splunk or Apache Spark, which can then provide visualization or business insights. The analytics engine can also detect events and drive an event-based automation engine, such as Extreme Workflow Composer, a DevOps-inspired platform powered by StackStorm, for automated actions on the infrastructure.
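The event-detection step can be sketched as a threshold rule evaluated on each streamed sample. When the rule fires, it calls an action callback that stands in for handing the event to an automation engine such as Extreme Workflow Composer. The sketch does not use any Splunk, Spark, or StackStorm API; the `ThresholdRule` class, the `cpu_pct` metric, and the thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ThresholdRule:
    """Hypothetical rule: fire when `metric` stays above `threshold`
    for `consecutive` samples in a row from the same device."""
    metric: str
    threshold: float
    consecutive: int
    action: Callable[[str, float], None]
    _streaks: Dict[str, int] = field(default_factory=dict, init=False, repr=False)

    def observe(self, device: str, sample: Dict[str, float]) -> bool:
        """Feed one streamed sample; return True if the action fired."""
        value = sample.get(self.metric)
        if value is None:
            return False
        streak = self._streaks.get(device, 0) + 1 if value > self.threshold else 0
        self._streaks[device] = streak
        # Fire once per episode: exactly when the streak reaches the limit
        if streak == self.consecutive:
            self.action(device, value)
            return True
        return False


def trigger_workflow(device: str, value: float) -> None:
    """Hypothetical placeholder for handing the event to an automation engine."""
    print(f"remediate {device}: cpu at {value}%")


if __name__ == "__main__":
    rule = ThresholdRule("cpu_pct", 90.0, 3, trigger_workflow)
    for v in [95, 96, 97, 98, 50, 91]:
        rule.observe("leaf-1", {"cpu_pct": v})
```

Requiring several consecutive breaches keeps a single noisy sample from triggering remediation, and firing only when the streak first reaches the limit avoids launching the same workflow on every subsequent sample.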

Key Attributes of Data Streaming

There are multiple attributes relevant to data streaming:

- Time interval: this is the interval at which data is streamed. Typically, it is configurable separately for different data attributes, so that, depending on the use case, some attributes are streamed more frequently than others. Per-attribute intervals matter because streaming data too frequently can put a significant burden on the CPU of a network switch or router and also results in very high data storage overhead.

- Type of data being provided (data model): this defines the data attributes being streamed. Examples include interface statistics and system and buffer usage.

- Encapsulation of the streamed data: this could be human-readable JSON or a bandwidth-optimized binary format such as GPB (Google Protocol Buffers).

- The protocol used for data transfer to the collector: this could be TCP, UDP, or gRPC (which itself runs over HTTP/2 on TCP).
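The attributes above can be captured in a small data structure. The sketch below models a hypothetical telemetry profile that maps data-model paths to their own streaming intervals, picks which attributes are due at a given second, and estimates the resulting export volume, which is the CPU and storage trade-off the time-interval attribute describes. The class, the path strings, and the allowed values are assumptions for illustration, not an SLX schema.

```python
from dataclasses import dataclass
from typing import Dict, List

ENCODINGS = {"json", "gpb"}
TRANSPORTS = {"tcp", "udp", "grpc"}


@dataclass
class TelemetryProfile:
    """Hypothetical profile: data-model path -> streaming interval in seconds."""
    name: str
    intervals_s: Dict[str, int]
    encoding: str = "gpb"
    transport: str = "grpc"

    def __post_init__(self) -> None:
        if self.encoding not in ENCODINGS:
            raise ValueError(f"unknown encoding: {self.encoding}")
        if self.transport not in TRANSPORTS:
            raise ValueError(f"unknown transport: {self.transport}")
        if any(every <= 0 for every in self.intervals_s.values()):
            raise ValueError("intervals must be positive")

    def due(self, t_s: int) -> List[str]:
        """Paths whose interval divides the elapsed time t_s."""
        return sorted(p for p, every in self.intervals_s.items() if t_s % every == 0)

    def exports_per_hour(self) -> int:
        """Per-attribute exports in one hour: a rough proxy for CPU and storage load."""
        return sum(3600 // every for every in self.intervals_s.values())


if __name__ == "__main__":
    profile = TelemetryProfile(
        "default",
        {"interface/counters": 10, "system/cpu": 30, "buffer/usage": 60},
    )
    print(profile.due(30))
    print(profile.exports_per_hour())
```

In this example, dropping every interval to one second raises the load from 540 to 10,800 exports per hour, a twentyfold increase, which is why frequent streaming is usually reserved for the attributes that need it.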

Data Streaming on Extreme SLX Platforms

The SLX platforms from Extreme provide robust data streaming capabilities. Data is streamed via gRPC or the TCP/UDP protocols. The data model is represented in YANG, and the data is encoded in GPB (Google Protocol Buffers) format. Note that you can use a GPB-to-JSON decoder to get the data in JSON format. The streaming interval (granularity) is configurable.
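To show why GPB is compact and what a GPB-to-JSON decoder does, the sketch below hand-rolls the Protocol Buffers wire format for a hypothetical interface-statistics message. Each field is written as a key (field number shifted left three bits, OR-ed with a wire type) followed by its value; integers use base-128 varints (7 bits per byte, high bit set on every byte except the last), and strings are length-prefixed. The three-field schema is an assumption, not an SLX message. In practice you would generate code from the proto file and could convert messages with `google.protobuf.json_format.MessageToJson`.

```python
import json
from typing import Dict, Tuple

# Hypothetical schema: field number -> (name, wire type); 0 = varint, 2 = length-delimited
SCHEMA = {1: ("if_name", 2), 2: ("in_octets", 0), 3: ("out_octets", 0)}
NAMES = {name: (num, wt) for num, (name, wt) in SCHEMA.items()}


def encode_varint(value: int) -> bytes:
    """Base-128 varint, least significant group first (non-negative values only)."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def decode_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def encode_stats(record: Dict) -> bytes:
    out = bytearray()
    for name, value in record.items():
        num, wire_type = NAMES[name]
        out += encode_varint((num << 3) | wire_type)  # field key
        if wire_type == 2:
            data = value.encode("utf-8")
            out += encode_varint(len(data)) + data
        else:
            out += encode_varint(value)
    return bytes(out)


def decode_to_json(buf: bytes) -> str:
    """Minimal GPB-to-JSON decoder for the hypothetical schema above."""
    record, pos = {}, 0
    while pos < len(buf):
        key, pos = decode_varint(buf, pos)
        num, wire_type = key >> 3, key & 0x7
        name = SCHEMA[num][0]
        if wire_type == 2:
            length, pos = decode_varint(buf, pos)
            record[name] = buf[pos:pos + length].decode("utf-8")
            pos += length
        elif wire_type == 0:
            record[name], pos = decode_varint(buf, pos)
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
    return json.dumps(record)


if __name__ == "__main__":
    stats = {"if_name": "ethernet 0/1", "in_octets": 123456789, "out_octets": 987654321}
    binary = encode_stats(stats)
    print(len(binary), len(json.dumps(stats)), decode_to_json(binary))
```

For this record the binary encoding takes 25 bytes, against 76 bytes for the equivalent JSON text, because field names are replaced by one-byte keys and numbers are packed into varints.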

There are two streaming models: data can be streamed to a collector, or a gRPC client can request data, which is then pushed to the client at the desired interval.

In the collector model shown in Figure 2, the switch acts as a client and streams data to the desired collector over TCP. Multiple collectors can be configured on the switch at any given time. The information to be configured for each collector includes a specific IP address and TCP/UDP port for connectivity, the data streaming interval, and a telemetry profile to identify which data to stream to the collector.
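The per-collector settings listed above map naturally onto a small validated record. The sketch below is a hypothetical data model for illustration only, not SLX CLI syntax or an Extreme API; the collector names, the documentation-range addresses, and the ports are made up.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CollectorConfig:
    """Hypothetical model of one collector entry configured on the switch."""
    name: str
    address: str
    port: int
    transport: str   # "tcp" or "udp"
    interval_s: int  # streaming interval
    profile: str     # telemetry profile naming the data to stream

    def __post_init__(self) -> None:
        ipaddress.ip_address(self.address)  # raises ValueError on a malformed IP
        if not 1 <= self.port <= 65535:
            raise ValueError(f"port out of range: {self.port}")
        if self.transport not in ("tcp", "udp"):
            raise ValueError(f"unsupported transport: {self.transport}")
        if self.interval_s <= 0:
            raise ValueError("interval must be positive")


def validate_collectors(collectors: List[CollectorConfig]) -> None:
    """Multiple collectors are allowed, but names and destinations must be unique."""
    names = [c.name for c in collectors]
    if len(names) != len(set(names)):
        raise ValueError("duplicate collector name")
    destinations = [(c.address, c.port, c.transport) for c in collectors]
    if len(destinations) != len(set(destinations)):
        raise ValueError("duplicate collector destination")


if __name__ == "__main__":
    validate_collectors([
        CollectorConfig("splunk", "192.0.2.10", 50051, "tcp", 30, "interface-stats"),
        CollectorConfig("spark", "192.0.2.20", 50052, "udp", 60, "system-stats"),
    ])
    print("collector configuration looks consistent")
```

Validating at configuration time catches a mistyped address or port before the switch starts streaming to a destination that does not exist.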

In the second model, a gRPC client initiates a connection to the switch, which then acts as a gRPC server. The client specifies the telemetry profile, and the switch streams data to the client. The client can be written in any language that gRPC supports (e.g., Java, Python, Ruby, and Go). The YANG model is converted to a proto file, which can be used to generate the client stub code.
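To show the shape of this dial-in model without depending on generated code, the sketch below imitates a server-streaming call with a plain Python generator: the client makes one request naming a telemetry profile and then iterates over responses as the switch produces them. This mirrors how grpcio's Python API presents a server-streaming call to the client, as an iterator of response messages, but `subscribe`, `consume`, and the message fields are hypothetical stand-ins, not stubs generated from an SLX proto file.

```python
import itertools
import time
from typing import Callable, Dict, Iterator, List


def subscribe(profile: str, read_sample: Callable[[], Dict], interval_s: float,
              sleep: Callable[[float], None]) -> Iterator[Dict]:
    """Hypothetical stand-in for the switch's server-streaming handler.

    One request (the profile name) produces an open-ended stream of responses,
    one per interval, until the client stops reading.
    """
    for seq in itertools.count():
        response = dict(read_sample())
        response.update(profile=profile, seq=seq)
        yield response
        sleep(interval_s)


def consume(stream: Iterator[Dict], limit: int) -> List[Dict]:
    """Client side: read responses as they arrive (capped here for the demo)."""
    return list(itertools.islice(stream, limit))


if __name__ == "__main__":
    octets = itertools.count(1_000, 500)
    stream = subscribe("interface-stats", lambda: {"in_octets": next(octets)},
                       interval_s=0.1, sleep=time.sleep)
    for message in consume(stream, 3):
        print(message)
```

Passing `sleep` in as a parameter keeps the streaming interval explicit and lets a test substitute a fake clock instead of waiting in real time.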

Finally, an SSL certificate can also be configured on the switch to enable streaming over a secure channel.
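On the receiving side, a collector or gRPC client has to verify the switch's certificate for the secure channel to mean anything. The sketch below builds a client TLS context (SSL's modern successor) with the Python `ssl` module; the function name and the `cafile` path are hypothetical, and the settings are a reasonable baseline rather than an Extreme recommendation.

```python
import ssl
from typing import Optional


def make_telemetry_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for a collector or gRPC client connecting to the switch.

    `cafile` is a hypothetical path to the CA certificate that signed the
    switch certificate; when None, the system trust store is used.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=cafile)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSLv3, TLS 1.0 and 1.1
    ctx.check_hostname = True                     # certificate must match the switch name
    ctx.verify_mode = ssl.CERT_REQUIRED           # reject unverified certificates
    return ctx


if __name__ == "__main__":
    ctx = make_telemetry_tls_context()
    print(ctx.minimum_version, ctx.verify_mode)
```

The context is used by wrapping a socket with `ctx.wrap_socket(sock, server_hostname=...)`; with grpcio, `grpc.ssl_channel_credentials(root_certificates=...)` plays the same role for a gRPC channel.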

Building a Complete Data Streaming Solution

There is tremendous industry momentum behind using data analytics and machine learning to enhance business operations and growth, built on a foundation of real-time data streamed from applications and infrastructure. Data streaming from the Extreme SLX platforms can help enterprises and cloud providers build a complete solution for data analysis and automation.

In our next blog, we will look at a couple of demos and examples, including how to build a data search and visualization solution using Splunk. Additionally, we’ll look at how Extreme Workflow Composer can be used to provide event-based automation/remediation where events are detected and triggered by an analytics platform.

Explore how data streaming can help in day-to-day operations by following the links above or working with your Extreme representative.

How are you using data streaming in your organization? Leave a comment.

The post Network Infrastructure Visibility and Analytics with Data Streaming authored by Deepak Patil appeared first on Brocade.

This post was originally published by Product Marketing Director Alan Sardella.